One of the effects of the ongoing trade war between the US and China is likely to be the accelerated development of what are being called “artificial intelligence chips”, or AI chips for short, also sometimes referred to as AI accelerators.

AI chips could play a critical role in economic growth going forward because they will inevitably feature in cars, which are becoming increasingly autonomous; smart homes, where electronic devices are becoming more intelligent; robotics, obviously; and many other technologies.

The term “AI chips” refers to a new generation of microprocessors which are specifically designed to process artificial intelligence tasks faster, using less power.

Obvious, you might think, but some might wonder what the difference between an AI chip and a regular chip would be when all chips of any type process zeros and ones – a typical processor, after all, is actually capable of AI tasks.

Graphics-processing units are particularly good at AI-like tasks, which is why they form the basis for many of the AI chips being developed and offered today.

Without getting out of our depth, while a general microprocessor is an all-purpose system, AI processors are embedded with logic gates and highly parallel calculation systems that are more suited to typical AI tasks such as image processing, machine vision, machine learning, deep learning, artificial neural networks, and so on.
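To make the “highly parallel” point a little more concrete, here is a minimal sketch in plain Python of the multiply-and-add pattern that dominates neural network workloads. The function name `dense_layer` and the toy numbers are our own illustrative assumptions, not taken from any chipmaker's toolchain. Each output value is computed independently of the others, which is the property that parallel hardware can exploit by working on many of them at once.

```python
def dense_layer(inputs, weights):
    """Compute one neural-network layer as a set of independent dot products.

    inputs  : list of floats, length n
    weights : list of rows, one row of n floats per output value
    """
    outputs = []
    for row in weights:
        # Each row is its own multiply-accumulate chain. No row depends on
        # another, so parallel hardware could compute all rows simultaneously.
        acc = 0.0
        for x, w in zip(inputs, row):
            acc += x * w
        outputs.append(acc)
    return outputs


# Toy data (hypothetical): three input values, two output values.
pixels = [1.0, 2.0, 3.0]
layer_weights = [
    [0.5, 0.0, 0.5],
    [1.0, 1.0, 1.0],
]
result = dense_layer(pixels, layer_weights)
print(result)
```

A real network repeats this pattern across millions of weights, which is why hardware built around many simultaneous multiply-and-add units can outpace an all-purpose processor on these tasks.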

Maybe one could use cars as metaphors. A general microprocessor is your typical family car that might have good speed and steering capabilities. An AI chip is a supercar, which typically has a more powerful engine and super-sensitive steering, and a lower profile for less wind resistance, and so on.

In other words, staying within our shallow depth of intellectual capabilities, we are led to believe that there is definitely a difference between AI chips and their conventional counterparts.

Microcontrollers, as many readers might know, are a type of chip which is built to do a very limited number of functions – operate a washing machine, for example, or an automatic door, or something like that.

Microcontrollers are sometimes called application-specific integrated circuits. Or rather, they used to be. But the ASICs definition appears to be relevant to a broader range of chips now. And AI chips may actually be classed in that category, since they are highly specialized.

But that is the extent of our knowledge about the technology. It’s a complex subject, with its own rich jargon, so simplifying is not that easy, especially now that things are changing. More as we get it.

AI wars

Some might argue that the subtext of, or the really important issue in, the trade war between the US and China is indeed artificial intelligence.

It’s been said by many experts and commentators that whichever country can gain a lead in AI will be the most powerful economy going forward.

AI does, of course, have massive potential in the military, but we’ll try and stick to commercial and industrial sectors for now.

And we’ll also leave out quantum computing because that’s a few years away at least. But that, too, is another technology that the US is concerned about losing the lead in.

China’s progress in AI has been accelerated, possibly because of the government’s backing, but it’s difficult to see what politicians can do other than provide funding and other resources, along with propagandist proclamations.

If the funding and all the other things are available, as they are in both the US and China – and Russia, for that matter – it’s the technologists who, ultimately, will be the ones that make the progress.

On the point of Russia, it’s worth noting here that the country’s president, Vladimir Putin, has made comments on more than one occasion about the importance of AI.

“Artificial intelligence is the future,” said Putin in a recently televised panel discussion. “Whoever becomes the leader in this sphere will become the ruler of the world.”

Putin’s words might sound somewhat bombastic to some people, but it’s difficult to disagree with his sentiments or overstate the power of AI.

Most people, however, would probably prefer that the world moves towards a more diverse culture, a co-operative multi-culture, if you will, rather than one AI “god” ruling the world, as Putin appears to envision.

Or at least we would.

But that’s enough politics.

AI chips with everything

So now onto the companies that we think are the top developers of AI chips, although not in any particular order – just companies that have showcased their technology and have either already put them into production or are very close to doing so.

And, as a reminder, we’ve tried to stick to the definition of AI chips that we outlined at the beginning of the article, rather than just superfast conventional microprocessors.

In other words, these microprocessors listed here are being described as “AI chips” and are specifically designed for AI tasks.

And just to provide a commercial context, the AI chip market is currently valued at around $7 billion, but is forecast for phenomenal growth to more than $90 billion in the next four years, according to a study by Allied Market Research.
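As a rough sanity check on that forecast, the short calculation below works out the yearly growth rate it implies. It is only a sketch: it assumes the two figures are exactly $7 billion and $90 billion, exactly four years apart, and that growth compounds evenly, none of which the study summary above spells out. The function name is our own.

```python
def implied_annual_growth(start_value, end_value, years):
    """Return the compound annual growth rate linking two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars (assumed exact).
rate = implied_annual_growth(7, 90, 4)
print(f"Implied growth: {rate:.0%} per year")
```

Under those assumptions the market would need to grow by roughly 89 percent a year, in other words nearly double every year for four years running.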

1. Alphabet

Google’s parent company is overseeing the development of artificial intelligence technologies in a variety of sectors, including cloud computing, data centers, mobile devices, and desktop computers.

Probably most noteworthy is its Tensor Processing Unit, an ASIC specifically designed for Google’s TensorFlow programming framework, used mainly for machine learning and deep learning, two branches of AI.

Google’s Cloud TPU is a data center or cloud solution and is about the size of a credit card, but the Edge TPU is smaller than a one-cent coin and is designed for “edge” devices, referring to devices at the edge of a network, such as smartphones and tablets and machines used by the rest of us, outside of data centers.

Having said that, analysts who observe this market more closely say Google’s Edge TPU is unlikely to feature in the company’s own smartphones and tablets anytime soon, and is more likely to be used in more high-end, enterprise and expensive machines and devices.

2. Apple

Apple has been developing its own chips for some years and could eventually stop using suppliers such as Intel, which would be a huge shift in emphasis. But having already largely disentangled itself from Qualcomm after a long legal wrangle, Apple does look determined to go its own way in the AI future.

The company has used its A11 and A12 “Bionic” chips in its latest iPhones and iPads. The chips use Apple’s Neural Engine, which is a part of the circuitry that is not accessible to third-party apps. The A12 Bionic chip is said to be 15 percent faster than its previous incarnation, while using 50 percent of the power.

The A13 version is in production now, according to Inverse, and is likely to feature in more of the company’s mobile devices this year. And considering that Apple has sold more than a billion mobile devices, that’s a heck of a ready-made market, even without its desktop computer line, which still accounts for only 5 percent of the overall PC market worldwide.

3. Arm

Arm, or ARM Holdings, produces chip designs which are used by all the leading technology manufacturers, including Apple.

As a chip designer, it doesn’t manufacture its own chips, which gives it an advantage, perhaps in the way Microsoft had an advantage by not making its own computers.

In other words, Arm is hugely influential in the market.

The company is currently developing its AI offering along three main tracks: Project Trillium, a new class of processors that are “ultra-efficient” and scalable, aimed at machine learning applications; Machine Learning Processor, which is self-explanatory; and Arm NN, short for neural networks, a software kit that connects frameworks such as TensorFlow and Caffe, which is a deep learning framework, to Arm hardware.

4. Intel

The world’s largest chipmaker was reported to have been generating $1 billion in revenue from selling AI chips as far back as 2017. Actually, Intel is not currently the world’s largest chipmaker, but it probably was at the time.

And the processors being considered in that report were of the Xeon range, which is not actually AI-specific, just a general-purpose range that was enhanced to deal with AI better.

While it may continue to improve Xeon, Intel has also developed an AI chip range called “Nervana”, which is described as a line of “neural network processors”.

Artificial neural networks mimic the workings of the human brain, which learns through experience and example, which is why you often hear about machine and deep learning systems needing to be “trained”.
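For readers wondering what “training” looks like in practice, here is a deliberately tiny sketch in plain Python. It trains a single artificial “neuron” with two adjustable numbers to learn a simple rule from examples. The function name, the learning rate, and the toy data are all our own assumptions for illustration; real systems adjust millions of such numbers, which is where specialized chips come in.

```python
def train_neuron(examples, epochs=200, learning_rate=0.1):
    """Fit prediction = w * x + b to example pairs by repeated small corrections."""
    w, b = 0.0, 0.0  # start knowing nothing
    for _ in range(epochs):
        for x, target in examples:
            prediction = w * x + b
            error = prediction - target
            # Nudge each parameter in the direction that shrinks the error.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


# Toy examples (hypothetical) that follow the hidden rule y = 2x + 1.
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(examples)
print(round(w, 2), round(b, 2))
```

The neuron is never told the rule. It only sees examples and corrects itself a little after each one, ending up with values very close to 2 and 1. That loop of “guess, measure the error, adjust” is the experience-and-example learning described above.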

With Nervana, scheduled to ship later this year, Intel appears to be prioritizing solving issues relating to natural language processing and deep learning.

5. Nvidia

In the market for GPUs, which we mentioned can process AI tasks much faster than all-purpose chips, Nvidia looks to have a lead. Similarly, the company appears to have gained an advantage in the nascent market for AI chips.

The two technologies would seem to be closely related to each other, with Nvidia’s advances in GPUs helping to accelerate its AI chip development. In fact, GPUs appear to underpin Nvidia’s AI offerings, and its chipsets could be described as AI accelerators.

The specific AI chip technologies Nvidia supplies to the market include its Tesla chipset, Volta, and Xavier, among others. These chipsets, all based on GPUs, are packaged into software-plus-hardware solutions that are aimed at specific markets.

Xavier, for example, is the basis for an autonomous driving solution, while Volta is aimed at data centers.

Deep learning seems to be the main area of interest for Nvidia. Deep learning is a subset of machine learning that uses artificial neural networks with many layers. It typically needs larger amounts of data and far more computation than simpler machine learning methods, which is one reason it benefits so much from specialized hardware.

6. Advanced Micro Devices

Like Nvidia, AMD is another chipmaker which is strongly associated with graphics cards and GPUs, partly because of the growth of the computer games market over the past couple of decades, and lately because of the growth of bitcoin mining.

AMD offers hardware-and-software solutions such as EPYC CPUs and Radeon Instinct GPUs for machine learning and deep learning.

Epyc is the name of the processor AMD supplies for servers, mainly in data centres, while Radeon is a graphics unit mainly aimed at gamers. Other chips AMD offers include the Ryzen, and perhaps the more well-known Athlon.

The company appears to be at a relatively early stage of its development of AI-specific chips, but with its relative strength in GPUs, observers are tipping it to become one of the leaders in the market.

AMD has been contracted to supply its Epyc and Radeon systems to the US Department of Energy for the building of what will be one of the world’s fastest and most powerful supercomputers, dubbed “Frontier”.

7. Baidu

Baidu is China’s equivalent of Google in the sense that it’s mainly known as an internet search engine. And like Google, Baidu has moved into new and interesting business sectors such as driverless cars, which, of course, need powerful microprocessors, preferably AI chips.

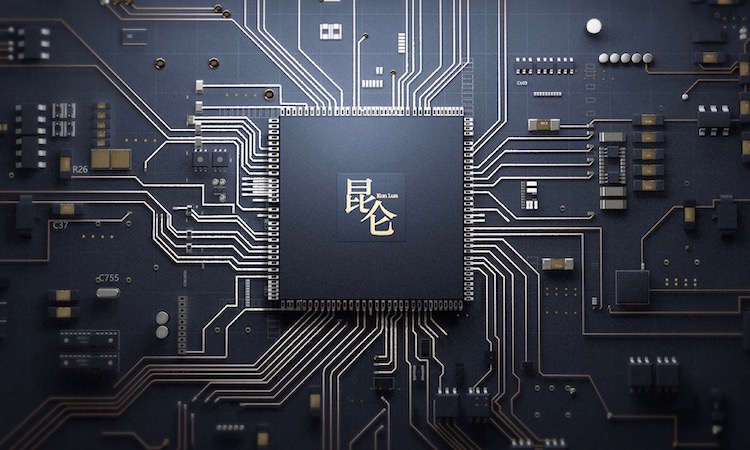

And to that end, Baidu last year unveiled the Kunlun, describing it as a “cloud-to-edge AI chip”.

The company sees Kunlun’s application mainly in its existing AI ecosystem, which includes search ranking and its deep learning frameworks. But the chip has other potential applications in the new sectors Baidu is going into, including autonomous vehicles, intelligent devices for the home, voice recognition, natural language processing, and image processing.

8. Graphcore

After listing seven relatively long-established companies whose main activities are not actually geared towards developing AI chips, we arrive at Graphcore, a startup company whose central aim is to build and supply AI chips to the market.

The company has persuaded the likes of BMW, Microsoft and other global names to invest a total of $300 million into its business, which is now valued at around $2 billion.

The company’s main product right now appears to be the Rackscale IPU-Pod, based on its Colossus processor and aimed at data centers, although it may create more with the mythical amount of money invested, as that’s where its future lies.

The “IPU” stands for intelligent processing unit in this context.

9. Qualcomm

Having made a ton of money through its association with Apple from the start of the smartphone boom, Qualcomm probably feels left out in the cold by the tech giant’s decision to stop buying its chips. But Qualcomm itself is, of course, no minnow in its sector and has been making some significant investments with the future in mind.

Last month, the company unveiled a new “Cloud AI Chip”, and appears to be linking it to its developments in fifth-generation telecommunications networks, or 5G. These two technologies are thought to be fundamental to building the new ecosystem of autonomous vehicles and mobile computing devices.

Analysts say Qualcomm is something of a latecomer to the AI chip space, but the company has extensive experience in the mobile device market which would be helpful in achieving its stated aim to “make on-device AI ubiquitous”.

10. Adapteva

This is one of the more intriguing companies on this list, not least because of the Parallella, which is sometimes described as the cheapest supercomputing system available.

Adapteva’s main AI chip offering is the Epiphany, a 1,024-core, 64-bit microprocessor which is said to be a “world first” in many ways.

The Darpa-funded startup ran a successful Kickstarter campaign for its Parallella product and has raised more than $10 million in total investments.

Unfortunately, however, its website is currently difficult to access, which means we can’t confirm the status of its technology and company directly from the people concerned. But with so much interest in its technology, maybe the company hasn’t had the time to sort that part out.

11. Thinci

Robotics and Automation News reported on this company towards the end of last year, when automotive technology supplier Denso led a $65 million investment round in the startup.

We described Thinci as a developer of autonomous car technology, which it is, but it’s also interested in the unmanned aerial vehicles, or drones, market.

The company says its technology is currently installed in data centers, but not much more is known about its products. What Thinci’s website does say is that the company is developing hardware-plus-software solutions for machine learning, deep learning, neural networks, and vision processing.

12. Mythic AI

Having raised more than $40 million in funding, Mythic is planning to implement its “AI Without Borders” philosophy on the world, starting with data centers.

The company claims to have developed a method whereby deep neural networks no longer weigh heavily on conventional local AI because its system performs hybrid digital and analog calculations inside flash arrays, which it says is an “entirely new approach”.

Its GPU is capable of desktop computer performance but is the size of a shirt button, which means it can deliver “massive parallel computing” while being almost weightless.

All of which probably means its chips would improve the performance of edge devices without adding much weight or need for power.

13. Samsung

Having overtaken Intel as the world’s largest chipmaking company, and Apple as the world’s leading smartphone company, Samsung is looking to create entirely new markets that never existed before.

One of those markets is for foldable smartphones, although the company seems to have suffered a hiccup in that area because some units broke in the hands of reviewers.

But even regular smartphones will need more AI-capable chips, so its work in that area will not be wasted.

Towards the end of last year, Samsung released the latest version of its Exynos microprocessor, which is designed for long-term evolution, or LTE, communications networks.

Samsung says the new Exynos is equipped for on-device AI and features an enhanced neural processing unit.

14. Taiwan Semiconductor Manufacturing Company

Despite being one of Apple’s main chip suppliers for many years, TSMC is not exactly a boastful company. Sure, it’s got a website and updates investors with results, but it doesn’t talk much about its actual work.

Luckily, news media such as DigiTimes keep abreast of the goings-on at the chipmaker and recently reported that e-commerce giant Alibaba has contracted TSMC – as well as Global Unichip – to build an AI chip.

We don’t know much else about this, but given the size of Alibaba and the business connections of TSMC, the order is likely to bring about significant changes in the AI chip market.

15. HiSilicon

This is the semiconductor business unit of Huawei, the telecommunications equipment manufacturer which is currently the subject of some indirect trade embargoes.

Basically, Huawei has been effectively banned from doing business in the US, and some European countries are now following America’s lead.

What this will mean for HiSilicon is probably too early to tell, but the company is not hanging around to find out. It recently launched Kirin, which is described as an AI chip in some media, but we’re not so sure.

Anyway, it’s probably an early stage in HiSilicon’s AI chip capabilities and the company will need to accelerate its efforts if it is to offset the increasing number of supply bans Huawei is facing.

16. IBM

No list of this type would be complete without at least one mention of IBM, which, as you might expect, has massively well-funded research and development into all manner of technologies, many of which are related to AI.

The company’s much-talked-about Watson AI actually uses what we’re calling conventional processors rather than AI-specific ones, but they’re quite powerful nonetheless.

In terms of specialized AI chips, IBM’s TrueNorth is probably in that category. TrueNorth is described as a “neuromorphic chip”, modeled on the human brain, and contains a massive 5.4 billion transistors, which sounds like a lot until you find out that AMD’s Epyc has 19.2 billion.

But it’s not all about transistor count and the actual number of components, it’s about how those components are used, and IBM is investing heavily in taking a central place in the AI chip landscape of the future.

17. Xilinx

Talking of the number of components, Xilinx is said to be the maker of the microprocessor with the highest number of transistors. Its Versal or Everest chipsets are said to contain 50 billion transistors.

Moreover, Xilinx does describe Versal as an AI inference platform. “Inference” is the term for the stage at which a machine learning or deep learning system that has already been trained is applied to new data to produce predictions or decisions.

The full Versal and Everest solutions contain chips from other companies, or at least ones designed by other companies. But Xilinx is probably one of the first to offer such high-power computing capabilities to the market in self-contained packages.

18. Via

Although Via doesn’t offer an AI chip as such, it does offer what it describes as an “Edge AI Developer Kit”, which features a Qualcomm processor and a variety of other components. And it offers us an opportunity to mention a different type of company.

It’s probably just a matter of time before AI is integrated into all the other low-cost, tiny computer suppliers, such as Arduino, Raspberry Pi, and others. One or two already pack an AI chip. Pine64 is one of them, according to Geek.

Apparently, it’s possible even now to develop AI applications using Raspberry Pi and Arduino, but we’d have to refer you to Instructables or a similar website if you want more information about that. It’s certainly worth keeping tabs on the sector.

19. LG

One of the world’s largest suppliers of consumer electronics, LG is a giant which seems to make some nimble moves. Its interest in robotics is evidence of this, but then, a lot of companies are looking to be ready for when smart homes let in more intelligent machines.

This website reported recently that LG has unveiled its own AI chip, the LG Neural Engine, and the company says that its strategy is to “accelerate the development of AI devices for the home”.

But even before it gets to the edge devices, it’s likely that LG will use the chips in its data centers and backroom systems.

20. Imagination Technologies

Virtual reality and augmented reality use up more computing resources than almost anything else that runs on a device. Some of Google’s data center servers were said to have been brought to a crashing halt during the global craze over the Pokémon augmented reality game a couple of years ago.

So VR and AR probably necessitate the integration of AI chips in the data center as well as in the edge device. And Imagination sort of does that with its PowerVR GPU.

Imagination describes the latest PowerVR as a “complete neural network accelerator solution for AI chips” which delivers more than 4 tera operations per second, making it “the highest performance density per square millimeter in the market”, according to the company.
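To give a feel for what a figure like 4 tera operations per second could mean, here is a back-of-the-envelope sketch. The peak figure is the one quoted above; the workload of 2 billion operations per image is a purely hypothetical number we picked for illustration, and real chips rarely sustain their peak rate.

```python
peak_ops_per_second = 4e12   # "more than 4 tera operations per second", as quoted
ops_per_inference = 2e9      # hypothetical workload: 2 billion operations per image

# Theoretical ceiling only: ignores memory limits and idle compute units.
max_inferences_per_second = peak_ops_per_second / ops_per_inference
print(f"Upper bound: {max_inferences_per_second:,.0f} inferences per second")
```

Under those assumptions, the ceiling works out to 2,000 images a second, which is the kind of headroom that matters for video-heavy VR and AR applications.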

21. MediaTek

Like most companies on this list, MediaTek is a “fabless” semiconductor company, meaning it doesn’t do the fabrication or manufacturing of the chips itself, just the design and development.

Its NeuroPilot technology embeds what it describes as “heterogeneous computing capabilities” such as CPUs, GPUs, and AI processing units into its system-on-chip products.

In this context, “system-on-chip” refers to a single integrated circuit which combines most or all of the components of a computing machine.

22. Wave Computing

This company could probably be described as a specialist AI platform provider. Last month, it launched the Triton AI, which is another system-on-chip.

Wave says Triton is an “industry first” that enables developers to address a broad range of AI use cases with a single platform. It supports inference and training, and is flexible enough to support new AI algorithms.

Wave offers a range of AI solutions that go from edge devices to servers and data center racks.

23. SambaNova

Having raised more than $200 million, this startup is very well resourced to develop custom AI chips for its customers.

Still in the early stage of its business, SambaNova says it is building hardware-plus-software solutions to “drive the next generation of AI computing”.

One of the company’s main investors is Alphabet, or Google. You’ll find that a lot of the big, established companies are buying into innovative new startups as a way to perhaps avoid being disrupted by them.

24. Groq

This company was set up by some former Google employees, including one or two who were involved in the Tensor project, and is said to be rather low-key.

Crunchbase reported last year that the startup had raised $60 million to develop its ideas, which are founded on its stated belief that compute’s next “breakthrough will be powered by new, simplified architectural approach to hardware and software”.

Not much else can be gleaned from its website, unless perhaps you’re an expert. But expect to hear more about this company going forward.

25. Kalray

This is a company Robotics and Automation News has featured in the past. One of its senior executives made a presentation and gave us an interview which can be viewed on our YouTube channel.

Essentially, Kalray is a well-funded European operation which appears to have produced an innovative chip for AI processing, in data centers and on edge devices. The company says its method enables multiple neural network layers to compute concurrently, using minimal power.

It appears to be particularly interested in intelligent and autonomous cars, which is understandable, given its close proximity to the giant German automotive industry.

26. Amazon

Having practically invented the cloud computing market, with its Amazon Web Services business unit, it seems logical that Amazon gets into the AI chip market, especially as its data centers could probably be made more efficient through their integration.

The world’s largest online retailer unveiled its AWS Inferentia AI chip towards the end of last year. It’s still yet to be formally launched, but even when it is, it’s unlikely to be sold to outside companies, just supplied to Amazon group businesses.

27. Facebook

Maybe we shouldn’t include this company on the list because it’s only just recently entered into an agreement with Intel on the development of an AI chip.

But Facebook has launched a number of innovative hardware products for the data center, so it’s probably worth watching what it does in the AI chip market.