Ever-increasing levels of vehicle autonomy are poised to revolutionize both personal and freight transport, reducing time spent sitting in traffic and ushering in new transportation modes such as robotaxis. Of course, autonomy and even ADAS (advanced driver assistance systems) require vehicles to maintain a clear, continuous picture of their surroundings. This creates a need for multiple sensors, and indeed multiple sensor types, to provide redundancy and compensate for the deficiencies of each.

SWIR Imaging

While much of the discussion regarding sensors for autonomous vehicles centers on LiDAR and radar, there is also a significant opportunity for other innovative image sensing technologies such as SWIR (short-wavelength infrared, 1000 to 2500 nm) imaging. The very high price of InGaAs sensors, the established technology for SWIR imaging, provides considerable motivation to develop much lower-cost alternatives that can detect light toward the lower end of the SWIR spectral region. Such SWIR sensors could then be employed in vehicles to provide better vision through fog and dust, owing to reduced scattering at these longer wavelengths.
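The "reduced scattering" argument can be made concrete for the simplest case. This is a minimal sketch, assuming Rayleigh scattering, in which scattered intensity scales as λ^-4. That scaling only holds for particles much smaller than the wavelength (fine haze, smoke). Typical fog droplets and most dust grains are comparable to or larger than SWIR wavelengths and fall in the Mie regime, where the wavelength dependence is much weaker, so the numbers below are an upper bound on the benefit rather than a fog prediction. The function name `rayleigh_ratio` and the chosen wavelengths are illustrative.

```python
# Rayleigh scattering scales as wavelength**-4. This is valid only for scatterers
# much smaller than the wavelength (e.g. fine haze or smoke particles); larger
# fog droplets fall in the Mie regime, where the wavelength dependence is weaker.


def rayleigh_ratio(lambda_nm: float, reference_nm: float) -> float:
    """Scattered intensity at lambda_nm relative to reference_nm (Rayleigh regime only)."""
    return (reference_nm / lambda_nm) ** 4


if __name__ == "__main__":
    visible = 550.0  # green light, near the middle of the visible band
    for swir in (1000.0, 1300.0, 1550.0):
        ratio = rayleigh_ratio(swir, visible)
        print(f"{swir:.0f} nm scatters {ratio:.3f} x as strongly as {visible:.0f} nm")
```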

One approach to affordable SWIR imaging is to extend the sensitivity of silicon photodetectors beyond the usual 1000 nm by increasing their thickness and structuring their surface. Since this ‘extended silicon’ approach can use existing CMOS fabrication technologies, it is likely to be a durable, relatively low-cost alternative that is highly applicable to autonomous vehicles and ADAS. However, because it is based on silicon, this technology is best suited to detecting light toward the lower end of the SWIR spectral region.
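Why silicon is limited to the lower end of the SWIR, and why extra thickness helps, can be sketched with two textbook relations: the absorption cutoff λ_c = hc/E_g (about 1100 nm for silicon's roughly 1.12 eV bandgap), and the Beer-Lambert absorbed fraction 1 - exp(-αd) for a layer of thickness d. Near the cutoff silicon absorbs only weakly, so a thin layer lets most of the light pass through. The absorption coefficient used below (about 64 cm^-1 at 1000 nm) is an approximate value assumed for illustration. Reflection is ignored, and `path_factor` is a hypothetical, crude stand-in for the longer optical path that surface structuring (light trapping) produces.

```python
import math

HC_EV_NM = 1239.84  # Planck constant x speed of light, in eV*nm


def cutoff_wavelength_nm(bandgap_ev: float) -> float:
    """Longest wavelength absorbed via band-to-band transitions: hc / E_g."""
    return HC_EV_NM / bandgap_ev


def absorbed_fraction(alpha_per_cm: float, thickness_um: float, path_factor: float = 1.0) -> float:
    """Beer-Lambert absorbed fraction, 1 - exp(-alpha * effective path length).

    path_factor (hypothetical) crudely models the longer optical path created by
    surface structuring; reflection losses are ignored.
    """
    path_cm = thickness_um * 1e-4 * path_factor  # 1 um = 1e-4 cm
    return 1.0 - math.exp(-alpha_per_cm * path_cm)


if __name__ == "__main__":
    SI_BANDGAP_EV = 1.12    # room-temperature silicon bandgap
    ALPHA_SI_1000NM = 64.0  # approximate Si absorption coefficient at 1000 nm, 1/cm (assumed)
    print(f"Si cutoff: {cutoff_wavelength_nm(SI_BANDGAP_EV):.0f} nm")
    for thickness in (3.0, 10.0, 50.0):
        frac = absorbed_fraction(ALPHA_SI_1000NM, thickness)
        print(f"{thickness:>4.0f} um plain: {frac:.1%} absorbed at 1000 nm")
    # Illustrative light-trapping case; the factor of 10 is a hypothetical value.
    textured = absorbed_fraction(ALPHA_SI_1000NM, 10.0, path_factor=10.0)
    print(f"  10 um textured: {textured:.1%} absorbed at 1000 nm")
```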

Another method, which will likely be more expensive to produce than ‘extended silicon’ but capable of imaging at longer wavelengths, uses a hybrid structure that comprises quantum dots mounted onto a CMOS read-out integrated circuit (ROIC). Quantum dots have highly tunable absorption spectra that can be controlled by changing their diameter, enabling light up to 2000 nm to be absorbed. Indeed, QD-on-CMOS hybrid detectors are already commercially available and capable of imaging up to 1700 nm. The ability to image at long wavelengths makes them especially promising for industrial imaging applications and potentially hyperspectral imaging.
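The size tuning described above can be illustrated with an empirical size-to-bandgap relation of the form E(d) = E_bulk + 1/(a·d² + b·d), in which quantum confinement raises the gap of small dots above the bulk value and larger dots therefore absorb longer wavelengths. The sketch assumes lead sulfide (PbS) dots, a common SWIR quantum-dot material that the article itself does not name. The coefficients `A` and `B` are approximate and illustrative, since real absorption edges also depend on synthesis and surface chemistry. With these values, diameters of roughly 7 to 9 nm fall in the 1700 to 2000 nm range mentioned above.

```python
HC_EV_NM = 1239.84  # Planck constant x speed of light, in eV*nm

# Illustrative parameters for PbS quantum dots (an assumption: the QD material
# is not specified in the text). E_BULK_EV is the bulk PbS bandgap; A and B are
# approximate coefficients of an empirical size-gap fit, with d in nm.
E_BULK_EV = 0.41
A, B = 0.0252, 0.283


def qd_gap_ev(diameter_nm: float) -> float:
    """Approximate first-exciton energy (eV) of a dot with the given diameter.

    The confinement term 1 / (A*d^2 + B*d) shrinks as the dot grows, so the
    gap approaches the bulk value for large dots.
    """
    return E_BULK_EV + 1.0 / (A * diameter_nm**2 + B * diameter_nm)


def absorption_edge_nm(diameter_nm: float) -> float:
    """Wavelength (nm) corresponding to the first-exciton energy."""
    return HC_EV_NM / qd_gap_ev(diameter_nm)


if __name__ == "__main__":
    for d in (3.0, 5.0, 7.0, 9.0):
        print(f"d = {d:.0f} nm -> first exciton near {absorption_edge_nm(d):.0f} nm")
```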

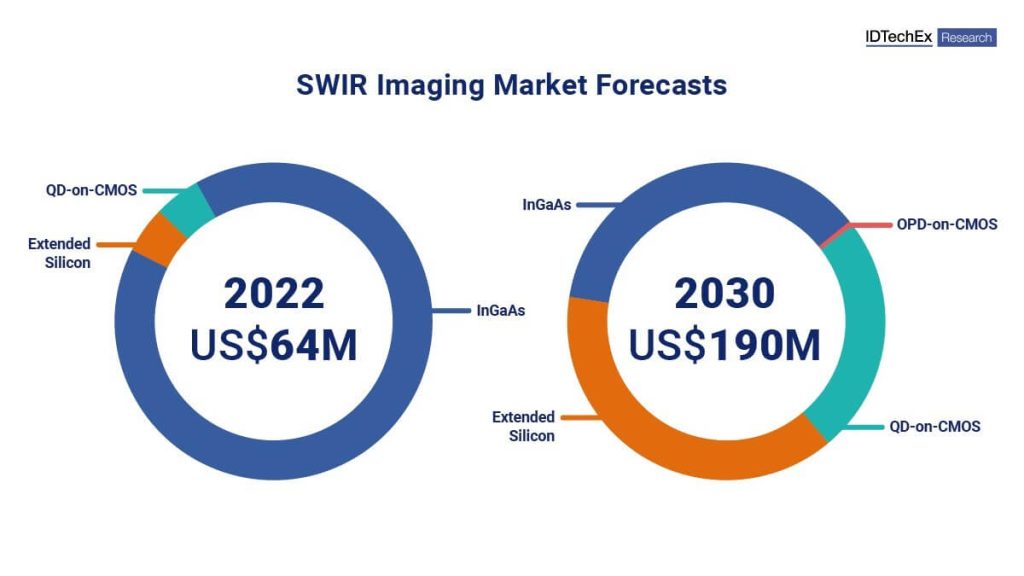

The emerging SWIR sensor market is forecast to grow over the next decade, driven by adoption in new applications enabled by these sensors' much lower price relative to InGaAs.

Event-Based Vision

Another technology gaining traction for autonomous vehicles and ADAS is event-based vision. This approach detects and time-stamps intensity changes asynchronously at each pixel, rather than capturing complete frames at a constant frequency. Relative to conventional frame-based imaging, event-based vision combines greater temporal resolution for rapidly changing image regions with much lower data-transfer and processing requirements.
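The event-generation principle can be illustrated by emulating an event sensor from ordinary sampled frames, broadly the approach taken by event-camera simulators. This is a minimal sketch, assuming a simple per-pixel model: each pixel remembers the log intensity at which it last fired and emits an `(x, y, t, polarity)` event every time the log intensity moves by a contrast threshold. Real sensors do this asynchronously in analog pixel circuitry rather than from frames. `frames_to_events`, `Event`, and the threshold value are illustrative names and parameters, not a vendor API.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    t: float       # timestamp in seconds
    polarity: int  # +1 = brighter, -1 = darker


def frames_to_events(frames: List[List[List[float]]], timestamps: List[float],
                     threshold: float = 0.2) -> List[Event]:
    """Emulate an event sensor from sampled intensity frames (hypothetical helper).

    Each pixel stores the log intensity at which it last fired. Whenever the
    current log intensity differs from that reference by one or more multiples
    of `threshold`, the pixel emits one event per threshold crossing and moves
    its reference accordingly. Pixels that do not change emit nothing.
    """
    eps = 1e-6  # avoids log(0) for completely dark pixels
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value + eps) - ref[y][x]
                crossings = int(abs(delta) / threshold)
                if crossings:
                    sign = 1 if delta > 0 else -1
                    events.extend(Event(x, y, t, sign) for _ in range(crossings))
                    ref[y][x] += sign * crossings * threshold
    return events


if __name__ == "__main__":
    # Hypothetical 4x4 scene: a static background plus one pixel that keeps
    # brightening, standing in for a moving object.
    size, n_frames = 4, 5
    frames = []
    for k in range(n_frames):
        frame = [[50.0] * size for _ in range(size)]
        frame[1][2] = 50.0 * 1.5 ** k
        frames.append(frame)
    timestamps = [k * 0.001 for k in range(n_frames)]

    events = frames_to_events(frames, timestamps)
    readings = size * size * (n_frames - 1)
    print(f"{len(events)} events vs {readings} pixel readings in frame mode")
    print(events[:2])
```

Pixels whose intensity never changes produce no output, so the event count tracks scene activity rather than resolution multiplied by frame rate, which is where the reduced data-transfer requirement comes from.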

Event-based vision offers significant advantages over conventional visible-light sensors in situations with sub-optimal visibility. Because only changes in the scene are detected, a complex static background produces no signal, so pedestrians and other moving vehicles can be clearly identified. Furthermore, since each pixel is triggered by a relative intensity change, event-based vision offers a significantly higher dynamic range, which improves detection at low light levels.
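The dynamic-range argument rests on pixels responding to relative rather than absolute change. The toy comparison below, with hypothetical threshold values and arbitrary intensity units, contrasts a log-contrast trigger of the kind event sensors use with a naive fixed-difference trigger. The same 25% brightening fires the log trigger at every illumination level, while the fixed threshold misses it in dim scenes and fires on tiny relative fluctuations in bright ones. The model ignores sensor noise, which sets practical low-light limits in real devices.

```python
import math


def log_change_fires(i_before: float, i_after: float, contrast_threshold: float = 0.15) -> bool:
    """Event-style trigger: fires on a relative (log) intensity change."""
    return abs(math.log(i_after) - math.log(i_before)) >= contrast_threshold


def linear_change_fires(i_before: float, i_after: float, abs_threshold: float = 5.0) -> bool:
    """Naive frame-difference trigger with a fixed absolute threshold, for comparison."""
    return abs(i_after - i_before) >= abs_threshold


if __name__ == "__main__":
    # The same 25% brightening at very different illumination levels
    # (arbitrary intensity units; all values are hypothetical).
    for level in (0.1, 10.0, 10_000.0):
        before, after = level, level * 1.25
        print(f"level {level:>8}: log trigger={log_change_fires(before, after)}, "
              f"linear trigger={linear_change_fires(before, after)}")
    # A static background pixel never fires the log trigger.
    print("static pixel:", log_change_fires(50.0, 50.0))
```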

An Addition to the ‘Sensor Suite’

Emerging SWIR and event-based vision sensors are not going to replace the sensor types already established for ADAS, namely LiDAR, radar, and cameras. Rather, they will augment the capabilities of these existing sensors as part of a varied vehicle ‘sensor suite’. Integrating information from multiple sensor types covers the weaknesses of individual approaches, provides redundancy, and will ultimately enable safer and increasingly autonomous vehicles.
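One simple way to see how combining sensors covers individual weaknesses is inverse-variance weighting of independent estimates of the same quantity, here a hypothetical distance to an obstacle. This is a minimal sketch, assuming unbiased, independent Gaussian errors. Production ADAS fusion stacks typically use far richer machinery (tracking filters, data association), and all sensor readings and uncertainties below are invented for illustration.

```python
from typing import Dict, Tuple


def fuse_estimates(readings: Dict[str, Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent estimates (illustrative).

    `readings` maps a sensor name to (estimate, standard_deviation). Each
    estimate is weighted by 1 / sigma**2, so more certain sensors dominate, and
    the fused standard deviation is never larger than the best single sensor's.
    """
    if not readings:
        raise ValueError("at least one sensor reading is required")
    weights = {name: 1.0 / sigma ** 2 for name, (_, sigma) in readings.items()}
    total_weight = sum(weights.values())
    fused = sum(weights[name] * est for name, (est, _) in readings.items()) / total_weight
    return fused, (1.0 / total_weight) ** 0.5


if __name__ == "__main__":
    # All values are hypothetical distances (m) and 1-sigma uncertainties (m).
    clear = {"lidar": (42.1, 0.1), "radar": (41.8, 0.5), "camera": (43.0, 1.5)}
    # In fog, the camera and LiDAR are assumed to degrade (larger sigma).
    fog = {"lidar": (40.0, 3.0), "radar": (41.9, 0.5), "camera": (47.0, 8.0)}
    for label, readings in (("clear", clear), ("fog", fog)):
        estimate, sigma = fuse_estimates(readings)
        print(f"{label:>5}: {estimate:.2f} m +/- {sigma:.2f} m")

    # Redundancy: losing one sensor still leaves a usable estimate.
    fog_without_camera = {k: v for k, v in fog.items() if k != "camera"}
    print("fog, camera lost:", fuse_estimates(fog_without_camera))
```

When a sensor degrades, as assumed for the camera and LiDAR in the fog case, its inflated uncertainty automatically reduces its weight, and removing a failed sensor from the dictionary still leaves a valid estimate: a small-scale version of the redundancy described above.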